Introduction to Impedance Matching in RF Systems

Impedance matching is a crucial concept in radio frequency (RF) design that ensures maximum power transfer and minimal signal reflections between a source and a load. In RF systems, the most commonly used characteristic impedance is 50 ohms. This article explores the historical and technical reasons behind the widespread adoption of 50 ohms as the standard impedance value in RF design.

What is Impedance Matching?

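Impedance matching is the practice of designing the input impedance of an electrical load, or the output impedance of a signal source, to maximize power transfer and minimize reflections from the load. Maximum power transfer occurs when the load impedance equals the complex conjugate of the source impedance; for purely resistive impedances, this simply means the two values are equal. Reflections are minimized when the load impedance equals the characteristic impedance of the transmission line that feeds it.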
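The power-transfer condition can be checked numerically. The sketch below is a minimal illustration that assumes a sinusoidal source with a 1 V peak amplitude and phasor (complex) impedances in ohms. The helper name `load_power` is hypothetical. It computes the average power delivered to a load and shows that a 50-ohm source delivers more power to a 50-ohm load than to a 25-ohm or 100-ohm load. It also shows that, for a complex source impedance, a complex-conjugate load receives more power than an identical one.

```python
# Hypothetical helper for illustration only: average power delivered to a load ZL
# by a sinusoidal source of peak amplitude vs_peak with internal impedance ZS.

def load_power(vs_peak: float, zs: complex, zl: complex) -> float:
    """Average power (W) dissipated in ZL: P = 1/2 * |I|^2 * Re(ZL)."""
    i_peak = vs_peak / (zs + zl)  # peak current phasor in the series loop
    return 0.5 * abs(i_peak) ** 2 * zl.real


if __name__ == "__main__":
    # Resistive case: a 50-ohm source driving three candidate loads.
    for rl in (25.0, 50.0, 100.0):
        print(f"RL = {rl:5.1f} ohm -> {load_power(1.0, 50.0, rl) * 1e3:.3f} mW")

    # Complex case (assumed ZS = 50 + j30 ohms): conjugate load vs identical load.
    zs = complex(50, 30)
    print(f"ZL = ZS*  -> {load_power(1.0, zs, zs.conjugate()) * 1e3:.3f} mW")
    print(f"ZL = ZS   -> {load_power(1.0, zs, zs) * 1e3:.3f} mW")
```

With these inputs, the resistive loads receive about 2.222, 2.500, and 2.222 mW. The conjugate and identical complex loads receive about 2.500 and 1.838 mW.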

Why is Impedance Matching Important in RF Design?

In RF systems, impedance mismatches can lead to several problems, such as:

- Reduced power transfer efficiency

- Signal reflections and standing waves

- Increased signal distortion and noise

- Damage to RF components due to high voltage standing wave ratio (VSWR)

Proper impedance matching ensures that the RF system operates efficiently and reliably, minimizing signal loss and distortion.
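The mismatch effects listed above are usually quantified with the reflection coefficient Γ = (Z_L − Z₀)/(Z_L + Z₀), from which VSWR, return loss, and mismatch loss follow. The sketch below assumes a lossless 50-ohm reference. The helper names are hypothetical and chosen for illustration.

```python
import math

# Hypothetical helpers for illustration; Z0 is an assumed lossless 50-ohm reference.
Z0 = 50.0


def reflection_coefficient(zl: complex, z0: float = Z0) -> complex:
    """Gamma = (ZL - Z0) / (ZL + Z0)."""
    return (zl - z0) / (zl + z0)


def vswr(gamma: complex) -> float:
    """VSWR = (1 + |Gamma|) / (1 - |Gamma|); infinite for total reflection."""
    mag = abs(gamma)
    return math.inf if mag >= 1 else (1 + mag) / (1 - mag)


def return_loss_db(gamma: complex) -> float:
    """Return loss in dB as a positive number; infinite for a perfect match."""
    mag = abs(gamma)
    return math.inf if mag == 0 else -20 * math.log10(mag)


def mismatch_loss_db(gamma: complex) -> float:
    """Power lost to reflection in dB, equal to -10*log10(1 - |Gamma|^2)."""
    mag = abs(gamma)
    return math.inf if mag >= 1 else 10 * math.log10(1 / (1 - mag**2))


if __name__ == "__main__":
    for zl in (50, 75, 100, complex(50, 50)):
        g = reflection_coefficient(zl)
        print(f"ZL={zl}: |G|={abs(g):.3f}  VSWR={vswr(g):.2f}  "
              f"RL={return_loss_db(g):.2f} dB  ML={mismatch_loss_db(g):.3f} dB")
```

For example, a 75-ohm load on a 50-ohm line gives |Γ| = 0.2, a VSWR of 1.5, about 14 dB of return loss, and under 0.2 dB of mismatch loss.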

Historical Background of 50 Ohms Impedance Standard

The adoption of 50 ohms as the standard characteristic impedance in RF systems has its roots in the early days of radio communication. In the 1930s, the development of coaxial cables for radio transmission played a significant role in establishing 50 ohms as the preferred impedance value.

The Role of Coaxial Cables in RF Transmission

Coaxial cables consist of an inner conductor surrounded by an insulating dielectric material and an outer conductor (shield). The geometry and properties of these components determine the cable’s characteristic impedance. Coaxial cables offer several advantages for RF transmission, including:

- Shielding against electromagnetic interference (EMI)

- Low signal attenuation

- Consistent characteristic impedance over a wide frequency range

The Development of 50 Ohm Coaxial Cables

In the early days of radio communication, various characteristic impedance values were used for coaxial cables, ranging from 30 ohms to 120 ohms. However, two main factors led to the eventual standardization of 50 ohms:

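- Power handling capability: Lower impedance values allow higher power transmission without a significant increase in cable diameter. For an air-dielectric line of fixed outer diameter, breakdown-limited power handling peaks at roughly 30 ohms.

- Attenuation: Conductor loss is lowest at a higher impedance, roughly 77 ohms for an air-dielectric line, and increases as the impedance is lowered. Higher loss limits transmission distance and signal quality.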

In the 1930s, Bell Labs conducted extensive research on coaxial cable design and determined that 50 ohms offered the best balance between power handling capability and attenuation. This finding led to the development of the RG-58 coaxial cable, which became widely used in military and commercial applications.

Technical Factors Contributing to 50 Ohms Standardization

In addition to the historical reasons, several technical factors have contributed to the widespread adoption of 50 ohms as the standard impedance value in RF design.

Optimal Power Handling and Attenuation

As mentioned earlier, 50 ohms offers a good balance between power handling capability and attenuation in coaxial cables. The peak power a coaxial cable can handle is limited by its peak voltage rating, which is set by the dielectric breakdown strength of the insulating material. Average power handling is further limited by heating in the conductors and dielectric.

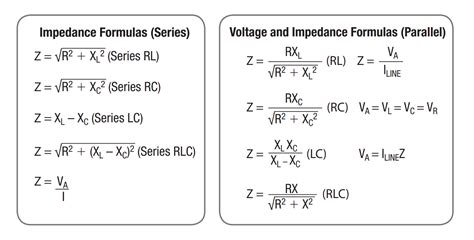

The characteristic impedance (Z₀) of a coaxial cable is given by the following equation:

Z₀ = (138 / √ε) × log₁₀(D / d)

Where:

- ε is the dielectric constant of the insulating material

- D is the inner diameter of the outer conductor

- d is the outer diameter of the inner conductor
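The formula can be evaluated directly, and it can be inverted to find the diameter ratio needed for a target impedance. This minimal sketch assumes diameters in any consistent unit and a uniform dielectric. The function names are hypothetical. It makes the trend discussed next concrete: a lower target impedance needs a smaller D/d.

```python
import math

# Hypothetical helpers for illustration, based on Z0 = (138 / sqrt(eps)) * log10(D / d).


def coax_z0(D: float, d: float, eps_r: float = 1.0) -> float:
    """Characteristic impedance in ohms; D and d must use the same units."""
    if D <= d or d <= 0:
        raise ValueError("require D > d > 0")
    return 138.0 / math.sqrt(eps_r) * math.log10(D / d)


def diameter_ratio(z0: float, eps_r: float = 1.0) -> float:
    """Inverse of coax_z0: D/d = 10 ** (Z0 * sqrt(eps_r) / 138)."""
    return 10 ** (z0 * math.sqrt(eps_r) / 138.0)


if __name__ == "__main__":
    # eps_r = 2.25 is an assumed value, roughly that of polyethylene.
    for z in (30, 50, 75, 100):
        print(f"{z:3d} ohm: D/d = {diameter_ratio(z):.2f} (air), "
              f"{diameter_ratio(z, 2.25):.2f} (eps_r = 2.25)")
```

A 50-ohm air line needs D/d of about 2.30. With a solid dielectric of εr = 2.25, the same impedance needs D/d of about 3.50.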

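For a fixed outer diameter, lower impedance values require a smaller D/d ratio, which increases the cable's capacitance per unit length. Below about 60 ohms in an air-dielectric line, this also lowers the peak voltage rating. Because power scales as V²/Z₀, however, breakdown-limited power handling keeps rising down to about 30 ohms. Higher impedance values require a larger D/d ratio, which increases the cable's inductance per unit length. Conductor loss falls as the impedance rises toward about 77 ohms and increases again beyond it.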

The table below gives a qualitative comparison of power handling capability and attenuation for air-dielectric coaxial lines with the same outer diameter but different characteristic impedance values:

| Impedance (Ω) | Power Handling | Attenuation |
|---|---|---|
| 30 | Highest | High |
| 50 | High | Low |
| 75 | Medium | Lowest |
| 100 | Low | Low |

As evident from the table, 50 ohms provides a balance between power handling capability and attenuation, making it an optimal choice for most RF applications.
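The trade-off between power handling and attenuation can be estimated from a simple model. The sketch below makes three assumptions:

- The line is air-dielectric with a fixed outer diameter D.
- Peak power is limited by a fixed breakdown field at the inner conductor.
- Loss comes from the conductors only, with no dielectric loss.

All physical constants cancel, so only relative values are reported. The function names are hypothetical. Under these assumptions, power handling scales as ln(x)/x² and conductor attenuation as (1 + x)/ln(x), where x = D/d.

```python
import math

# Hypothetical helpers for illustration; assumes an air line of fixed outer diameter.


def relative_metrics(z0: float) -> tuple[float, float]:
    """Relative (power_handling, attenuation) for an air coax of fixed outer diameter.

    x = D/d follows from Z0 = 138 * log10(x). Constants (breakdown field,
    conductivity, frequency, D) cancel, so only the x-dependence is kept.
    """
    ln_x = z0 * math.log(10) / 138.0  # ln(D/d), from Z0 = 138*log10(D/d)
    x = math.exp(ln_x)
    power = ln_x / x**2               # breakdown-limited peak power ~ ln(x)/x^2
    atten = (1 + x) / ln_x            # conductor loss ~ (1/d + 1/D)/Z0 ~ (1+x)/ln(x)
    return power, atten


def optima(z_min: float = 10.0, z_max: float = 150.0, step: float = 0.1) -> tuple[float, float]:
    """Grid search for the impedance of maximum power handling and of minimum loss."""
    n = int(round((z_max - z_min) / step))
    grid = [z_min + i * step for i in range(n + 1)]
    best_power = max(grid, key=lambda z: relative_metrics(z)[0])
    best_loss = min(grid, key=lambda z: relative_metrics(z)[1])
    return best_power, best_loss


if __name__ == "__main__":
    z_p, z_a = optima()
    p_max = relative_metrics(z_p)[0]
    a_min = relative_metrics(z_a)[1]
    print(f"max power near {z_p:.1f} ohm, min loss near {z_a:.1f} ohm")
    for z in (30, 50, 75, 100):
        p, a = relative_metrics(z)
        print(f"{z:3d} ohm: power {p / p_max:.2f} of max, loss {a / a_min:.2f}x min")
```

In this model, power handling peaks near 30 ohms and conductor loss is lowest near 77 ohms. A 50-ohm line keeps roughly 85% of the maximum power handling, while its loss is only about 10% above the minimum. This is the kind of compromise that favors 50 ohms.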

Compatibility with Connectors and Components

Another factor contributing to the standardization of 50 ohms is compatibility with existing connectors and components in RF systems. Common RF connectors such as BNC, SMA, and N-type are most widely used in their 50-ohm versions, although BNC and N-type connectors are also made in 75-ohm versions. The widespread use of these connectors across RF applications has further reinforced 50 ohms as the standard impedance value.

Additionally, many RF components, such as amplifiers, filters, and antennas, are designed to work with 50-ohm systems. By maintaining a consistent impedance value throughout the system, designers can ensure proper matching and minimize the need for additional matching networks.

Ease of Impedance Matching and Bandwidth Considerations

Impedance matching is essential for maximizing power transfer and minimizing signal reflections in RF systems. When the source and load impedances are equal, maximum power transfer occurs, and signal reflections are minimized.

In practice, achieving a good impedance match over a wide frequency range can be challenging. The standard matching techniques work at any reference impedance. However, 50 ohms is convenient because components, test equipment, and design tools built around it are widely available. Common techniques include:

- Quarter-wave transformers

- Stub matching networks

- Pi and T matching networks

- Lumped element matching networks

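Because the source, load, and interconnecting lines share a common 50-ohm reference, each matching network only has to transform a device's impedance to 50 ohms, which simplifies design. The achievable bandwidth still depends on the network topology and on how far the device impedance is from 50 ohms.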
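For purely resistive loads, two of the techniques listed above reduce to short formulas. A quarter-wave transformer needs a line of impedance √(Z₀·R_L), and a two-element L-network has a loaded Q of √(R_high/R_low − 1). The sketch below is a single-frequency illustration with these assumptions:

- The source and load are real resistances.
- The components are lossless.
- The L-network is low-pass, with a series inductor and a shunt capacitor.

The function names and the 200-ohm, 100 MHz example are hypothetical.

```python
import math

# Hypothetical helpers for illustration; real resistances and lossless parts assumed.


def quarter_wave_impedance(z0: float, r_load: float) -> float:
    """Line impedance of a lambda/4 transformer matching a real r_load to z0."""
    return math.sqrt(z0 * r_load)


def l_network(r_a: float, r_b: float, freq_hz: float) -> tuple[float, float, float]:
    """Low-pass L-network between two real resistances at one frequency.

    Topology: shunt capacitor across the higher resistance, series inductor
    toward the lower resistance. Returns (Q, L in henries, C in farads).
    """
    r_low, r_high = sorted((r_a, r_b))
    if r_high == r_low:
        raise ValueError("resistances already equal; no network needed")
    q = math.sqrt(r_high / r_low - 1)
    x_series = q * r_low   # inductive reactance, ohms
    x_shunt = r_high / q   # capacitive reactance magnitude, ohms
    w = 2 * math.pi * freq_hz
    return q, x_series / w, 1 / (w * x_shunt)


if __name__ == "__main__":
    print(f"lambda/4 line for 100 ohm -> 50 ohm: {quarter_wave_impedance(50, 100):.1f} ohm")
    q, ind, cap = l_network(50, 200, 100e6)
    print(f"L-network 50 <-> 200 ohm at 100 MHz: Q={q:.2f}, "
          f"L={ind * 1e9:.1f} nH, C={cap * 1e12:.2f} pF")
```

A 100-ohm load needs a quarter-wave section of about 70.7 ohms. The L-network example works out to roughly 137.8 nH and 13.78 pF. These values are correct only at the design frequency, which is why such networks are narrowband.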

Moreover, 50 ohms corresponds to practical trace widths when realized as microstrip or stripline transmission lines on common printed circuit board (PCB) substrates. This compatibility with PCB fabrication techniques has further contributed to the widespread use of 50 ohms in RF design.
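The sketch below illustrates why 50 ohms is easy to realize on a PCB. It uses the widely published quasi-static closed-form microstrip approximations found in standard microwave engineering textbooks, neglects trace thickness and dispersion, and solves for the 50-ohm trace width by bisection. The substrate values (1.6 mm thick, εr = 4.4, typical of FR-4) and the function names are assumptions for illustration. It is a rough estimate, not a substitute for a field solver or a fabricator's stackup data.

```python
import math

# Hypothetical helpers; closed-form quasi-static microstrip approximations,
# zero trace thickness, no dispersion.


def microstrip_z0(w: float, h: float, eps_r: float) -> float:
    """Approximate Z0 (ohms) of a microstrip of width w over a substrate of height h."""
    u = w / h
    if u <= 1:
        eps_eff = (eps_r + 1) / 2 + (eps_r - 1) / 2 * ((1 + 12 / u) ** -0.5 + 0.04 * (1 - u) ** 2)
        return 60 / math.sqrt(eps_eff) * math.log(8 / u + u / 4)
    eps_eff = (eps_r + 1) / 2 + (eps_r - 1) / 2 * (1 + 12 / u) ** -0.5
    return 120 * math.pi / (math.sqrt(eps_eff) * (u + 1.393 + 0.667 * math.log(u + 1.444)))


def width_for_z0(target: float, h: float, eps_r: float) -> float:
    """Bisection on trace width; Z0 decreases as the trace gets wider."""
    lo, hi = 0.01 * h, 20 * h
    for _ in range(100):
        mid = (lo + hi) / 2
        if microstrip_z0(mid, h, eps_r) > target:
            lo = mid  # impedance too high: widen the trace
        else:
            hi = mid  # impedance too low: narrow the trace
    return (lo + hi) / 2


if __name__ == "__main__":
    w = width_for_z0(50.0, 1.6, 4.4)
    print(f"50-ohm trace width on 1.6 mm, eps_r 4.4: {w:.2f} mm")
```

On these assumed substrate values, the estimate comes out at roughly 3 mm, a comfortable width for standard PCB processes.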

Exceptions to the 50 Ohm Standard

While 50 ohms is the most common characteristic impedance in RF systems, there are some exceptions to this standard. In certain applications, other impedance values may be used for specific reasons.

75 Ohm Systems in Cable Television and Video Applications

In cable television (CATV) and video applications, 75 ohms is often used as the characteristic impedance. This choice is primarily motivated by the need for lower attenuation over longer distances, as CATV systems require the distribution of signals over extensive cable networks.

The use of 75 ohms in video applications also has historical reasons. Early television systems used 75-ohm transmission lines due to their lower cost and better impedance matching with the high-impedance vacuum tube amplifiers used at the time.

High Impedance Systems in Low Power Applications

In some low power RF applications, such as wireless sensor networks and IoT devices, higher impedance values (e.g., 100 ohms or more) may be used to reduce power consumption and extend battery life. For a given power level, a higher impedance requires less current (I = √(P/R)), at the cost of a higher voltage swing (V = √(P·R)). This can be advantageous in power-constrained environments.
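The reduced-current claim above follows from P = I²R. The same power relation, written as P = V²/R, shows that the required voltage rises at the same time. The short sketch below uses a hypothetical helper name and assumes purely resistive loads. It compares the RMS drive needed for 1 mW (0 dBm) into 50 ohms and into 100 ohms.

```python
import math

# Hypothetical helper for illustration; purely resistive load assumed.


def drive_levels(power_w: float, r_ohms: float) -> tuple[float, float]:
    """RMS current (A) and voltage (V) that deliver power_w into a resistance r_ohms."""
    current = math.sqrt(power_w / r_ohms)  # from P = I^2 * R
    voltage = math.sqrt(power_w * r_ohms)  # from P = V^2 / R
    return current, voltage


if __name__ == "__main__":
    for r in (50.0, 100.0):
        i, v = drive_levels(1e-3, r)
        print(f"{r:5.1f} ohm: {i * 1e3:.2f} mA rms, {v * 1e3:.1f} mV rms")
```

Doubling the impedance from 50 to 100 ohms cuts the current by a factor of √2, from about 4.47 mA to 3.16 mA. It raises the voltage by the same factor, from about 224 mV to 316 mV.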

However, higher impedance systems may face challenges in terms of signal integrity, noise susceptibility, and impedance matching over wide bandwidths.

FAQ

1. Why is 50 ohms the most common impedance value in RF systems?

50 ohms has become the standard impedance value in RF systems due to its balance between power handling capability and attenuation in coaxial cables, compatibility with existing connectors and components, and ease of impedance matching over wide bandwidths.

2. What are the advantages of using coaxial cables for RF transmission?

Coaxial cables offer several advantages for RF transmission, including shielding against electromagnetic interference (EMI), low signal attenuation, and consistent characteristic impedance over a wide frequency range.

3. How does the characteristic impedance of a coaxial cable affect its power handling capability and attenuation?

For a given cable diameter, lower impedance values allow higher power transmission, with breakdown-limited power handling peaking near 30 ohms in an air-dielectric line. Attenuation, by contrast, is lowest near 77 ohms and rises as the impedance moves away from that value. Higher attenuation limits transmission distance and signal quality.

4. What are some common impedance matching techniques used in 50-ohm RF systems?

Common impedance matching techniques used in 50-ohm RF systems include quarter-wave transformers, stub matching networks, Pi and T matching networks, and lumped element matching networks.

5. Are there any exceptions to the 50-ohm standard in RF design?

Yes, there are some exceptions to the 50-ohm standard. In cable television (CATV) and video applications, 75 ohms is often used for lower attenuation over longer distances. In low power RF applications, such as wireless sensor networks and IoT devices, higher impedance values may be used to reduce power consumption and extend battery life.

Conclusion

The widespread adoption of 50 ohms as the standard characteristic impedance in RF systems is the result of both historical and technical factors. The development of coaxial cables in the 1930s, particularly the work done by Bell Labs, played a crucial role in establishing 50 ohms as the preferred impedance value. From a technical standpoint, 50 ohms offers an optimal balance between power handling capability and attenuation, compatibility with existing connectors and components, and ease of impedance matching over wide bandwidths.

While there are some exceptions to the 50-ohm standard, such as 75-ohm systems in CATV and video applications and high impedance systems in low power applications, 50 ohms remains the predominant choice in RF design. Understanding the origin and rationale behind this standardization is essential for RF engineers and designers to make informed decisions when developing RF systems and components.

As RF technology continues to evolve, the 50-ohm standard is likely to remain a cornerstone of RF design, ensuring compatibility and interoperability among various components and systems. By leveraging the benefits of this standardization, RF engineers can focus on innovating and pushing the boundaries of wireless communication while building upon a solid foundation of established practices and techniques.
